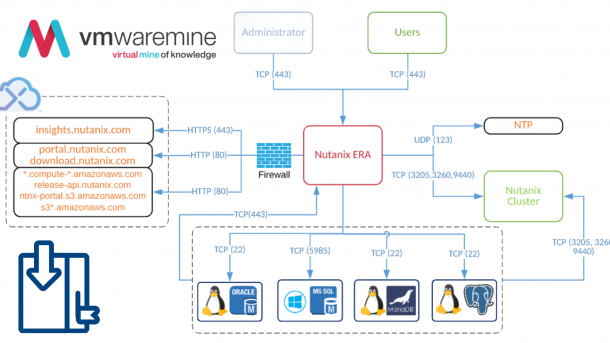

For migrations from VMware vSphere to Nutanix AHV, please use Nutanix Move (a free V2V tool developed by Nutanix).

Today brings the next part of my Migration to Nutanix AHV series: this time we migrate RHEL 7.x from VMware ESXi to Nutanix AHV.

NOTE: The procedure below also applies to CentOS 7 (see comments 🙂)

Software requirements:

- Nutanix AOS 4.5.1.2 or newer

- Nutanix AHV – 20151109 or newer

- vSphere 5.X or 6.0

- Remove all snapshots from the VM

Let’s get started. First, check whether the VirtIO modules are present in the system. As the snippet below shows, they are available as modules, so there is nothing to install or compile.

FYI: all modern Linux kernels (version 2.6.32 and above) have VirtIO drivers available by default.

    [root@localhost ~]# grep -i virtio /boot/config-`uname -r`
    CONFIG_VIRTIO_BLK=m
    CONFIG_SCSI_VIRTIO=m
    CONFIG_VIRTIO_NET=m
    CONFIG_VIRTIO_CONSOLE=m
    CONFIG_HW_RANDOM_VIRTIO=m
    CONFIG_VIRTIO=m
    # Virtio drivers
    CONFIG_VIRTIO_PCI=m
    CONFIG_VIRTIO_BALLOON=m
    # CONFIG_VIRTIO_MMIO is not set
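The same check can be scripted when several VMs need to be inspected. The following is only a sketch: the function name `check_virtio_config` is made up for this example, and the list of four options is my assumption about what the disk, SCSI, PCI and network drivers used later in this post require.

```shell
# Report how each VirtIO driver is built according to a kernel config file.
# check_virtio_config is a hypothetical helper name, not a standard tool.
check_virtio_config() (
    config_file=$1
    status=0
    for opt in CONFIG_VIRTIO_PCI CONFIG_VIRTIO_BLK CONFIG_SCSI_VIRTIO CONFIG_VIRTIO_NET; do
        # Config lines look like CONFIG_VIRTIO_BLK=m (module) or =y (built in)
        value=$(sed -n "s/^${opt}=//p" "$config_file")
        case $value in
            y) echo "$opt: built into the kernel" ;;
            m) echo "$opt: loadable module" ;;
            *) echo "$opt: not available"; status=1 ;;
        esac
    done
    # Non-zero exit status if any option is missing
    exit $status
)
```

On the source VM it could be called as `check_virtio_config /boot/config-$(uname -r)`.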

Next, check whether any VirtIO drivers are already baked into the initramfs of the current kernel. If the output looks like the one below, with no VirtIO entries, you have to add the VirtIO modules to the initramfs.

    [root@localhost ~]# cp /boot/initramfs-`uname -r`.img /tmp/initramfs-`uname -r`.img.gz
    [root@localhost ~]# zcat /tmp/initramfs-3.10.0-123.el7.x86_64.img.gz | cpio -it | grep virtio
    78918 blocks
    [root@localhost ~]#

On RHEL 6.5 and newer, you use dracut to add kernel modules. The command below adds the VirtIO SCSI module (SCSI adapter driver), the VirtIO block module (disk driver), the VirtIO PCI module and virtio_net (network driver).

Make sure you have backed up the current initramfs image, which dracut overwrites, before you run the command below.
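One simple way to take that backup is a timestamped copy next to the original file. This is a minimal sketch; `backup_initramfs` is a hypothetical helper name and the `.bak-<timestamp>` suffix is an arbitrary choice for this example.

```shell
# Copy a file next to itself with a timestamp suffix and print the backup path.
# backup_initramfs is a hypothetical helper, not part of dracut.
backup_initramfs() (
    image=$1
    backup="${image}.bak-$(date +%Y%m%d%H%M%S)"
    # -p preserves mode, ownership and timestamps of the original image
    cp -p "$image" "$backup" && echo "$backup"
)
```

For example: `backup_initramfs /boot/initramfs-$(uname -r).img`. If the rebuilt image does not boot, the copy can be moved back from a rescue environment.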

    [root@localhost ~]# dracut --add-drivers "virtio_pci virtio_blk virtio_scsi virtio_net" -f -v /boot/initramfs-`uname -r`.img `uname -r`
    Executing: /usr/sbin/dracut --add-drivers "virtio_pci virtio_blk virtio_scsi" -f -v /boot/initramfs-3.10.0-123.el7.x86_64.img 3.10.0-123.el7.x86_64
    *** Including module: bash ***
    *** Including module: i18n ***
    *** Including module: network ***
    *** Including module: ifcfg ***
    *** Including module: drm ***
    *** Including module: plymouth ***
    *** Including module: dm ***
    Skipping udev rule: 64-device-mapper.rules
    Skipping udev rule: 60-persistent-storage-dm.rules
    Skipping udev rule: 55-dm.rules
    *** Including module: kernel-modules ***
    *** Including module: lvm ***
    Skipping udev rule: 64-device-mapper.rules
    Skipping udev rule: 56-lvm.rules
    Skipping udev rule: 60-persistent-storage-lvm.rules
    *** Including module: fcoe ***
    *** Including module: fcoe-uefi ***
    *** Including module: resume ***
    *** Including module: rootfs-block ***
    *** Including module: terminfo ***
    *** Including module: udev-rules ***
    Skipping udev rule: 91-permissions.rules
    *** Including module: biosdevname ***
    *** Including module: systemd ***
    *** Including module: usrmount ***
    *** Including module: base ***
    *** Including module: fs-lib ***
    *** Including module: shutdown ***
    *** Including module: uefi-lib ***
    *** Including modules done ***
    *** Installing kernel module dependencies and firmware ***
    *** Installing kernel module dependencies and firmware done ***
    *** Resolving executable dependencies ***
    *** Resolving executable dependencies done***
    *** Hardlinking files ***
    *** Hardlinking files done ***
    *** Stripping files ***
    *** Stripping files done ***
    *** Generating early-microcode cpio image ***
    *** Constructing GenuineIntel.bin ****
    *** Creating image file ***
    *** Creating image file done ***
    [root@localhost ~]#
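The command above only changes the image it rebuilds. If you want later rebuilds (for example after a kernel update) to include the same drivers, dracut also reads the `add_drivers` setting from drop-in files. The sketch below is an optional extra and not part of the procedure I tested; the function name `write_virtio_dracut_conf` and the file name `virtio-ahv.conf` are made up for this example.

```shell
# Write a dracut drop-in so that future initramfs rebuilds add the VirtIO drivers.
# Both the function name and the file name virtio-ahv.conf are hypothetical.
write_virtio_dracut_conf() (
    conf_dir=${1:-/etc/dracut.conf.d}
    # dracut expects the module list to be padded with spaces inside the quotes
    printf 'add_drivers+=" virtio_pci virtio_blk virtio_scsi virtio_net "\n' \
        > "$conf_dir/virtio-ahv.conf"
)
```

Run as root with no argument, it writes to `/etc/dracut.conf.d`. A following `dracut -f` should then pick the drivers up without `--add-drivers`.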

Let’s verify that the drivers are now present in the initramfs.

    [root@localhost ~]# cp /boot/initramfs-`uname -r`.img /tmp/initramfs-`uname -r`.img.gz
    [root@localhost ~]# zcat /tmp/initramfs-3.10.0-123.el7.x86_64.img.gz | cpio -it | grep virtio
    usr/lib/modules/3.10.0-123.el7.x86_64/kernel/drivers/virtio/virtio.ko
    usr/lib/modules/3.10.0-123.el7.x86_64/kernel/drivers/virtio/virtio_ring.ko
    usr/lib/modules/3.10.0-123.el7.x86_64/kernel/drivers/virtio/virtio_pci.ko
    usr/lib/modules/3.10.0-123.el7.x86_64/kernel/drivers/block/virtio_blk.ko
    usr/lib/modules/3.10.0-123.el7.x86_64/kernel/drivers/scsi/virtio_scsi.ko
    76509 blocks
    [root@localhost ~]#
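The verification can also be done without copying the image, by piping a file listing into a small filter. In this sketch the listing is expected to come from `lsinitrd`, which ships with dracut. `missing_virtio_modules` is a hypothetical helper name, and the four module names are the ones passed to `--add-drivers` earlier.

```shell
# Read an initramfs file listing on stdin and print every required VirtIO
# module that does not appear in it. Succeeds only if nothing is missing.
# missing_virtio_modules is a hypothetical helper name.
missing_virtio_modules() (
    listing=$(cat)
    missing=0
    for mod in virtio_pci virtio_blk virtio_scsi virtio_net; do
        # Matches module files such as .../virtio_blk.ko or .../virtio_blk.ko.xz
        if ! printf '%s\n' "$listing" | grep -q "/${mod}\.ko"; then
            echo "$mod"
            missing=1
        fi
    done
    exit $missing
)
```

Typical use would be `lsinitrd /boot/initramfs-$(uname -r).img | missing_virtio_modules`. No output and a zero exit status mean all four modules are in the image.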

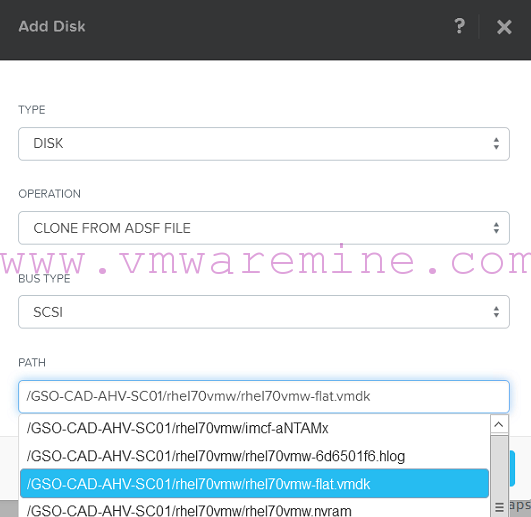

Now we are ready to Storage vMotion the VM's VMDK files to the Nutanix AHV cluster. Once that is done, power the virtual machine down, log in to Prism and create a new VM. Add a disk and, in the path field, specify the UNC path to the VM's FLAT VMDK file. Then add a network adapter and connect it to the correct port group.

NOTE: Use the CLONE FROM ADSF FILE operation with the SCSI bus type.

Acropolis VM disk configuration

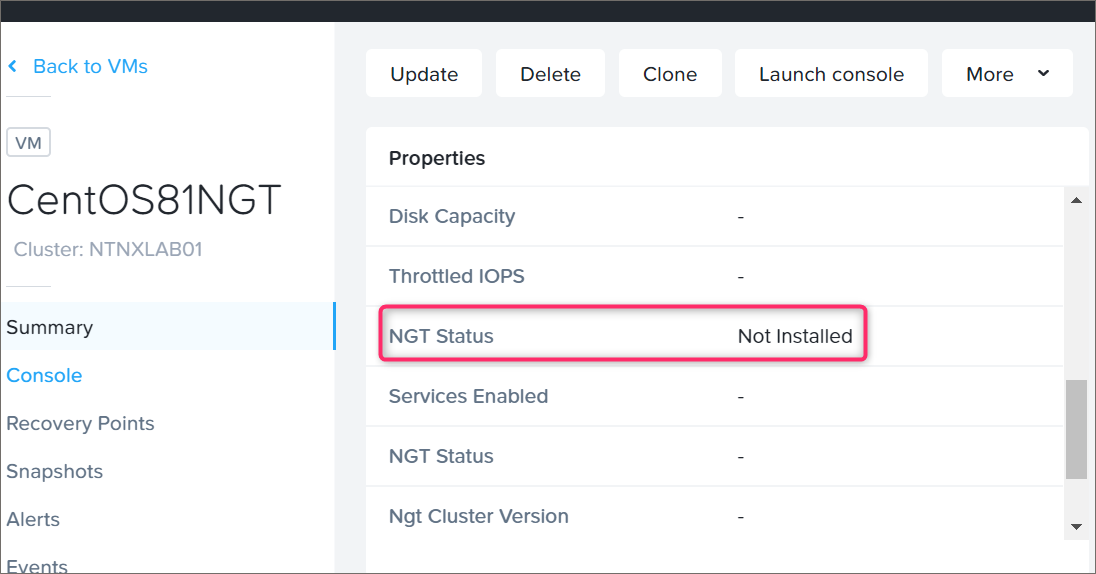

Now power the server on and wait for it to boot. Verify the IP configuration and the disk layout, and the server is ready to run.
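After the first boot on AHV you can also confirm which VirtIO drivers the guest actually loaded. A rough sketch, assuming the drivers are built as modules as in the config output earlier (built-in drivers would not be listed); `loaded_virtio_modules` is a hypothetical helper name.

```shell
# Print the names of loaded kernel modules that start with "virtio".
# Reads /proc/modules by default; a file in the same format can be passed instead.
# loaded_virtio_modules is a hypothetical helper name.
loaded_virtio_modules() {
    awk '$1 ~ /^virtio/ { print $1 }' "${1:-/proc/modules}" | sort
}
```

Together with `lsblk` for the disk layout and `ip addr show` for the network configuration, this covers the checks mentioned above.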

Migration Series

- Migrate Windows 2012R2 from Citrix XenServer to Nutanix AHV

- Migrate RHEL 6.5 from ESXi to Nutanix AHV

- Migrate Suse Linux from VMware ESXi to Nutanix AHV in minutes

- Migrate Windows 2012R2 server from ESXi to AHV in 5 minutes

- Migrate Linux RHEL 6.5 from XenServer to Nutanix AHV

Thanks much for this, I was looking all over and could not find the answer!!!

I was doing CentOS, but same thing basically 😛

A couple of comments…

1. Instead of creating a copy of the initramfs and zcat’ing the image, just use “lsinitrd | grep virtio” to see if the drivers are loaded; it’s what the tool is for.

2. It isn’t necessary to specify the path to the initramfs image when adding the drivers

3. These instructions apply to any Linux system using dracut, openSUSE, SUSE Enterprise, etc.

Thanks for the nice write up!

RHEL is not certified on Nutanix… isn’t it?!

It's best to use Nutanix Move (https://www.nutanix.com/products/move) for such tasks.

Hi Artur.

Thank you very much for your work. I have tested your procedure with existing RHEL 7 machines and it works correctly, but when I tried to reproduce the same procedure with a RHEL 8 VM, the initramfs did not regenerate correctly.

Is it possible to migrate RHEL 8 VMs from VMware to Nutanix? Thanks.