After a long break, I decided to continue the vSphere networking series. Today: 2 x 10Gbps NICs with standard and virtual distributed switches.

Let's play around with vSphere networking and 10Gbps connectivity, which is becoming mainstream in modern data centers. If you are planning to deploy a 10Gbps network, my advice is to buy a VMware vSphere Enterprise Plus license and use virtual distributed switches (vDS) for the networking configuration. vDS has great features such as Network I/O Control (NIOC), network bandwidth limits and many others.

Scenario #1 – 2 x 10Gbps – vSphere 4.X and vSphere 5.X – static configuration – rack mounted servers

In this scenario I have to design the network for five different types of traffic. Each traffic type has its own VLAN ID, which helps improve security and optimize pNIC utilization.

- mgmt – VLAN ID 10

- vMotion – VLAN ID 20

- VM network – VLAN ID 30

- storage NFS – VLAN ID 40

- FT (fault tolerance) – VLAN ID 50

The configuration above is my own design. It follows networking best practices and security standards, so feel free to use it in your deployments. Recommended switch and port group settings:

- Promiscuous mode – Reject

- MAC address changes – Reject

- Forged Transmits – Reject

- Network failover detection – link status only

- Notify switches – Yes

- Failback – No
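If you audit many port groups, it can help to compare each one against this baseline programmatically. A minimal Python sketch, assuming the settings have already been exported into a plain dictionary; the key names and the `deviations` function are hypothetical labels for illustration, not vSphere API property names:

```python
# Baseline taken from the list above. Key names are hypothetical labels,
# not vSphere API property names.
BASELINE = {
    "promiscuous_mode": "reject",
    "mac_address_changes": "reject",
    "forged_transmits": "reject",
    "failover_detection": "link_status_only",
    "notify_switches": True,
    "failback": False,
}


def deviations(port_group: dict) -> dict:
    """Return {setting: (actual, expected)} for every setting that differs from BASELINE.

    Settings missing from port_group are reported with an actual value of None.
    """
    return {
        key: (port_group.get(key), expected)
        for key, expected in BASELINE.items()
        if port_group.get(key) != expected
    }


# Example: a port group that accepts forged transmits.
pg = dict(BASELINE, forged_transmits="accept")
print(deviations(pg))  # {'forged_transmits': ('accept', 'reject')}
```

A missing setting is flagged rather than assumed compliant, so an incomplete export shows up as a deviation instead of silently passing.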

Scenario #2 – 2 x 10Gbps – vSphere 4.X and vSphere 5.X – Dynamic with NIOC and LBT – rack mounted servers

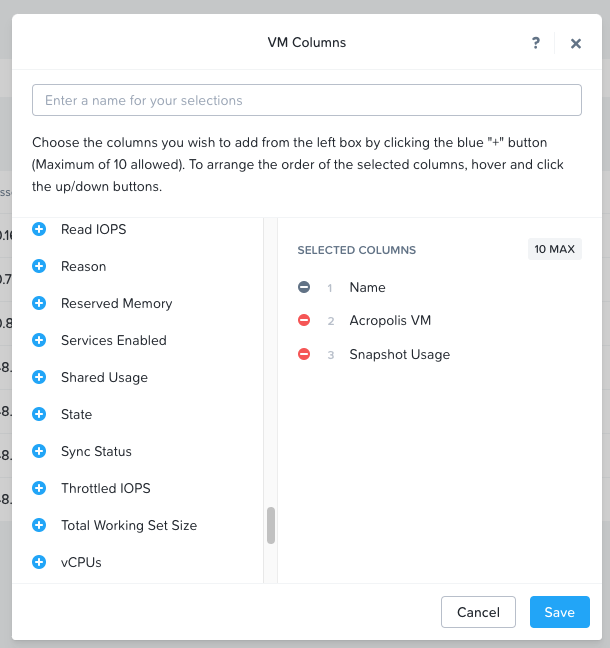

In this scenario I again have to design the network for five different types of traffic. This time, for network optimization, I used virtual distributed switches with NIOC enabled. Having NIOC enabled helps optimize NIC utilization and prioritize network traffic (see the table below). Both dvUplinks are active on all port groups, and each traffic type has shares applied, with values depending on traffic priority and bandwidth consumption (see the table below). LBT (Load Based Teaming) is a teaming policy based on physical NIC utilization: when an uplink's utilization reaches 75% of its bandwidth, the policy moves traffic to the second available NIC.
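The LBT behaviour can be illustrated with a simplified model. This Python sketch is not VMware's implementation: it assumes two uplinks, treats each virtual port's load as a fixed Mbps value, and moves whole ports off any uplink above 75%, only when the target uplink stays under the threshold. The real policy evaluates utilization averaged over a time window, and its port selection logic is internal to vSphere. Uplink and port names are made up.

```python
LINK_MBPS = 10_000  # 10Gbps uplinks, as in this scenario
THRESHOLD = 0.75    # LBT trigger point: 75% of link bandwidth


def load(ports: dict[str, float]) -> float:
    """Total load (Mbps) of the virtual ports currently on one uplink."""
    return sum(ports.values())


def rebalance(uplinks: dict[str, dict[str, float]],
              link_mbps: float = LINK_MBPS,
              threshold: float = THRESHOLD) -> list[tuple[str, str, str]]:
    """Move virtual ports off any uplink loaded above threshold * link_mbps.

    uplinks maps uplink name -> {virtual port name: load in Mbps} and is
    modified in place. Returns the moves as (port, from_uplink, to_uplink).
    """
    limit = link_mbps * threshold
    moves = []
    for src in list(uplinks):
        others = [u for u in uplinks if u != src]
        if not others:
            break
        # Try the smallest ports first (a simple heuristic, not LBT's actual rule).
        for port in sorted(uplinks[src], key=uplinks[src].get):
            if load(uplinks[src]) <= limit:
                break
            dst = min(others, key=lambda u: load(uplinks[u]))
            # Only move if the target uplink stays at or below the threshold.
            if load(uplinks[dst]) + uplinks[src][port] <= limit:
                uplinks[dst][port] = uplinks[src].pop(port)
                moves.append((port, src, dst))
    return moves


uplinks = {
    "dvUplink1": {"vm-a": 5000, "vm-b": 2000, "vm-c": 1500},  # 8500 Mbps > 7500
    "dvUplink2": {"vm-d": 1000},
}
print(rebalance(uplinks))  # [('vm-c', 'dvUplink1', 'dvUplink2')]
```

Moving the 1500 Mbps port brings dvUplink1 down to 7000 Mbps, under the 7500 Mbps trigger point, so no further moves are needed.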

NOTE: Shares take effect only when the physical NICs are saturated; otherwise each traffic type can consume as much bandwidth as is available.
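To make the NOTE concrete, here is a simplified Python model of how shares divide one uplink. It is a sketch under stated assumptions, not VMware's NIOC scheduler: when the link is not saturated every traffic type gets its demand; when it is, capacity is split in proportion to shares, a type that needs less than its slice keeps only what it needs, and the leftover is re-divided among the rest. The function name and the share values in the example are illustrative.

```python
def allocate(demand: dict[str, float], shares: dict[str, int],
             capacity: float = 10_000) -> dict[str, float]:
    """Split one uplink's capacity (Mbps) between traffic types by shares.

    If total demand fits on the link, every type simply gets its demand and
    shares play no role. Otherwise capacity is divided in proportion to shares;
    a type whose demand is below its slice keeps only its demand, and the
    remainder is re-divided among the types that still want more.
    """
    if sum(demand.values()) <= capacity:
        return dict(demand)  # link not saturated: shares are not applied
    alloc = {t: 0.0 for t in demand if demand[t] <= 0}
    active = {t for t in demand if demand[t] > 0}
    remaining = capacity
    while active:
        total = sum(shares[t] for t in active)
        # Types that need no more than their proportional slice are fully served.
        satisfied = {t for t in active if demand[t] <= remaining * shares[t] / total}
        if not satisfied:
            for t in active:
                alloc[t] = remaining * shares[t] / total
            break
        for t in satisfied:
            alloc[t] = demand[t]
            remaining -= demand[t]
        active -= satisfied
    return alloc


shares = {"vMotion": 10, "VM network": 15}  # illustrative values
print(allocate({"vMotion": 3000, "VM network": 2000}, shares))  # not saturated
result = allocate({"vMotion": 9000, "VM network": 2000}, shares)
print(result["vMotion"], result["VM network"])  # 8000.0 2000
```

In the saturated case vMotion's proportional slice is only 4000 Mbps, but because VM network needs just 2000 Mbps of its 6000 Mbps slice, vMotion ends up with 8000 Mbps.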

Network traffic types:

- mgmt – VLAN ID 10

- vMotion – VLAN ID 20

- VM network – VLAN ID 30

- storage NFS – VLAN ID 40

- FT (fault tolerance) – VLAN ID 50
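Because the same VLAN plan is reused across both scenarios, it can be worth keeping it in one place and sanity-checking it before it reaches the switch configuration. A minimal Python sketch, assuming the plan is stored as a simple name-to-VLAN-ID mapping; `VLAN_PLAN` and `check_vlan_plan` are hypothetical names, not part of any VMware tool:

```python
# Hypothetical representation of the VLAN plan listed above.
VLAN_PLAN = {
    "mgmt": 10,
    "vMotion": 20,
    "VM network": 30,
    "storage NFS": 40,
    "FT": 50,
}


def check_vlan_plan(plan: dict[str, int]) -> list[str]:
    """Return a list of problems: out-of-range or duplicated VLAN IDs."""
    problems = []
    seen: dict[int, str] = {}
    for name, vlan in plan.items():
        # Usable 802.1Q VLAN IDs are 1-4094 (0 and 4095 are reserved).
        if not 1 <= vlan <= 4094:
            problems.append(f"{name}: VLAN {vlan} out of range")
        if vlan in seen:
            problems.append(f"{name}: VLAN {vlan} already used by {seen[vlan]}")
        else:
            seen[vlan] = name
    return problems


if __name__ == "__main__":
    issues = check_vlan_plan(VLAN_PLAN)
    print("OK" if not issues else "\n".join(issues))
```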

How to calculate bandwidth based on shares:

- Sum the total number of shares: (5+10+15+10+10) = 50

- Number of shares for the traffic type – see the table below

- Uplink speed – 10Gbps

(number of shares for traffic type / total number of shares) * uplink speed = available bandwidth per uplink

Example for vMotion traffic:

(10/50) * 10000 = 2000 Mbps is the bandwidth available to vMotion (per NIC) when the physical NICs are saturated.
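The formula is easy to script if you want to check several share values at once. A minimal Python sketch using the 50-share total from the sum above and a 10Gbps (10000 Mbps) uplink; the function name is hypothetical:

```python
def share_bandwidth_mbps(type_shares: int, total_shares: int,
                         uplink_mbps: float = 10_000) -> float:
    """(shares for traffic type / total shares) * uplink speed, per uplink."""
    if total_shares <= 0:
        raise ValueError("total_shares must be positive")
    # Multiply before dividing so round share ratios give exact results.
    return type_shares * uplink_mbps / total_shares


TOTAL_SHARES = 5 + 10 + 15 + 10 + 10  # = 50, as summed above
print(share_bandwidth_mbps(10, TOTAL_SHARES))  # vMotion: 2000.0
```

With the same total, a traffic type with 5 shares gets 1000 Mbps and one with 15 shares gets 3000 Mbps per saturated uplink.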

A few tips to finish:

- If you go for 10Gbps, use a vSphere Enterprise Plus license.

- Use virtual distributed switches and enable NIOC.

- With vSphere 4 and vSphere 5, make sure your vCenter database is well protected (losing the vCenter DB and having to recreate it will cause an outage on all virtual networks).

See the links below for other networking configurations:

ESX and ESXi networking configuration for 4 NICs on standard and distributed switches

ESX and ESXi networking configuration for 6 NICs on standard and distributed switches

ESX and ESXi networking configuration for 10 NICs on standard and distributed switches

ESX and ESXi networking configuration for 4 x 10 Gbps NICs on standard and distributed switches

ESX and ESXi networking configuration for 2 x 10 Gbps NICs on standard and distributed switches

Would you consider these designs using only 2 x 1Gb interfaces?

We are about to set up a system based on a Dell C6100, which has 4 nodes. Each node has 48GB RAM and four 1Gb NICs (dual-port onboard and dual-port PCIe). This 4-host cluster will therefore share 16 physical NICs. We are considering dual physical switches for network traffic and dual switches for iSCSI. What would the topology look like in this case? We are planning on using ESXi 5.1 Enterprise Plus.

Will this work with two 4500-X switches on a VSS?

Can one use the latter distributed switch setup with the VMware iSCSI software adapter? I see you have “storage” on the vDS, but if both 10GbE dvUplinks are active in the storage port group, it is not compliant.

The circle marked “802.1q trunked” might be better removed: it seems to indicate a port-channel configuration when in fact it just means each network connection is trunked.