How to expand vSphere cluster on Nutanix

A few days ago I was helping one of my colleagues expand an existing vSphere cluster (based on Ivy Bridge processors) on Nutanix by adding new Nutanix nodes with Haswell processors.

Here is the scenario. The existing Nutanix and vSphere cluster is based on Ivy Bridge processors. After some time, the customer wants to expand the existing Nutanix deployment by adding several Nutanix and vSphere nodes, and the new nodes have Intel Haswell processors. Almost all nodes in the current Nutanix offering are based on Intel Haswell. As you know, if you expand an existing vSphere cluster by adding hardware with a newer CPU generation (in this case Intel Haswell), you must have an EVC baseline enabled to be able to vMotion VMs between the “old” and new hosts in the cluster or across clusters. Fortunately, EVC was enabled on the existing cluster, so adding nodes with Haswell on board was not really difficult. However, you should remember a few things:

- The Nutanix Operating System on the new nodes must be the same version as on the “old” Nutanix cluster.

- The vSphere version has to be the same as on the “old” vSphere cluster.

- The EVC baseline has to be enabled on the “old” vSphere cluster before you add new nodes to it.
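The three prerequisites above can be written down as a small pre-flight check. This is only a sketch: `NodeInfo` and `preflight_problems` are hypothetical names, the version strings in the usage lines are placeholders, and the values are assumed to be collected by hand rather than read from any Nutanix or VMware API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeInfo:
    """Versions of one new node (hypothetical record, filled in by hand)."""
    name: str
    nos_version: str      # Nutanix Operating System version on the node
    vsphere_version: str  # vSphere (ESXi) version on the node


def preflight_problems(cluster_nos, cluster_vsphere, evc_enabled, new_nodes):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if not evc_enabled:
        problems.append("EVC baseline is not enabled on the existing vSphere cluster")
    for node in new_nodes:
        if node.nos_version != cluster_nos:
            problems.append(
                f"{node.name}: NOS {node.nos_version} differs from cluster NOS {cluster_nos}"
            )
        if node.vsphere_version != cluster_vsphere:
            problems.append(
                f"{node.name}: vSphere {node.vsphere_version} differs from cluster vSphere {cluster_vsphere}"
            )
    return problems


# Placeholder values, for illustration only.
new_nodes = [NodeInfo("new-node-1", "4.1.2", "5.5 U2")]
for problem in preflight_problems("4.1.2", "5.5 U2", True, new_nodes):
    print(problem)
```

An empty result only means these three conditions hold; the standard VMware requirements still apply.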

In addition to the above, you should follow standard VMware requirements and best practices. The general guidance is to expand the vSphere cluster before the Nutanix cluster; it makes the process easier and faster. However, if you have already expanded the Nutanix cluster, it is still possible to expand the vSphere cluster, but it will take more time.

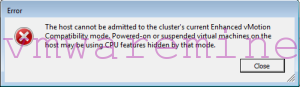

Both procedures have common steps, such as stopping the CVM before you add the host to the vSphere cluster. You have to stop the CVM because it uses Haswell processor features that are not compatible with the Ivy Bridge EVC baseline. You can try to add a vSphere node to the cluster with a running CVM, but you will get an error.

Cluster expansion order: vSphere cluster first, Nutanix cluster afterwards.

- Deploy the Nutanix Operating System and vSphere on the new nodes

- Stop the CVM on the new nodes only

- Put the new nodes into maintenance mode

- Add the new Nutanix nodes with vSphere to the vSphere cluster

- Start the CVMs on all new nodes

- Wait for the CVMs to boot up correctly

- Expand the Nutanix cluster

Cluster expansion order: Nutanix cluster first, vSphere cluster afterwards.

I’m assuming here that the Nutanix cluster has already been expanded, which is why you are here 🙂.

NOTE: run the procedure below on one node at a time.

- Stop the CVM on only one node from the pool of new nodes

- Put that vSphere host into maintenance mode

- Add the host to the vSphere cluster

- Start the CVM

- Wait for the CVM to boot up

- Log in to the Nutanix cluster and run cluster status. Make sure that all services are in UP status on the freshly booted CVM before you proceed with the next host.

    nutanix@NTNX-14SM15040014-A-CVM:10.1.222.60:~$ cluster status
    2015-06-08 10:54:48 INFO cluster:1798 Executing action status on SVMs 10.1.222.60,10.1.222.61,10.1.222.62
    The state of the cluster: start
    Lockdown mode: Disabled

    CVM: 10.1.222.60 Up
        Zeus                UP  [3582, 3605, 3606, 3610, 3677, 3690]
        Scavenger           UP  [4557, 4583, 4584, 4627]
        SSLTerminator       UP  [4653, 4675, 4676, 4749]
        Hyperint            UP  [19083, 19105, 19106, 19109, 19116, 19120]
        Medusa              UP  [5181, 5208, 5209, 5210, 5439]
        DynamicRingChanger  UP  [6080, 6110, 6111, 6189]
        Pithos              UP  [6104, 6160, 6161, 6197]
        Stargate            UP  [6129, 6168, 6169, 6198, 6199]
        Cerebro             UP  [6181, 6263, 6264, 23996]
        Chronos             UP  [6210, 6258, 6259, 6429]
        Curator             UP  [6266, 6400, 6401, 6481]
        Prism               UP  [6299, 6388, 6389, 6465, 24293, 24416]
        CIM                 UP  [6367, 6432, 6433, 6456]
        AlertManager        UP  [6402, 6446, 6447, 6496]
        Arithmos            UP  [6480, 6532, 6533, 6641]
        SysStatCollector    UP  [6555, 6593, 6594, 6775]
        Tunnel              UP  [6608, 6647, 6648]
        ClusterHealth       UP  [6655, 6694, 6695, 6875, 6899, 6900, 7095, 7096, 7097, 7098]
        Janus               UP  [6670, 6756, 6757, 6938]
        NutanixGuestTools   UP  [6723, 6791, 6792]

- Repeat the procedure for the next node
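If you repeat this check for many hosts, reading the output by eye gets tedious. Below is a minimal sketch that parses the text printed by cluster status and lists the services that are not UP on a given CVM. The function names are my own, and I am assuming that a stopped service is printed with the word `DOWN` in place of `UP`; adjust the pattern if your version prints something else.

```python
import re

# "CVM: <ip> Up" starts the section of one CVM. The whitespace after the colon
# is required so the shell prompt ("...-A-CVM:10.1.222.60:~$") is not matched.
CVM_HEADER = re.compile(r"\bCVM:\s+(\d{1,3}(?:\.\d{1,3}){3})\s+(Up|Down)\b")
# "<ServiceName> UP [pids]"; the DOWN spelling is an assumption.
SERVICE = re.compile(r"\b([A-Za-z]+)\s+(UP|DOWN)\b")


def parse_cluster_status(output):
    """Map each CVM IP to a {service name: state} dictionary."""
    headers = list(CVM_HEADER.finditer(output))
    result = {}
    for i, header in enumerate(headers):
        # A section runs until the next "CVM:" header or the end of the text.
        end = headers[i + 1].start() if i + 1 < len(headers) else len(output)
        section = output[header.end():end]
        result[header.group(1)] = dict(SERVICE.findall(section))
    return result


def services_not_up(output, cvm_ip):
    """Return the sorted names of services on cvm_ip that are not UP."""
    services = parse_cluster_status(output).get(cvm_ip)
    if services is None:
        raise KeyError(f"CVM {cvm_ip} not found in cluster status output")
    return sorted(name for name, state in services.items() if state != "UP")
```

Paste the captured output into a string and call `services_not_up(text, "10.1.222.60")`; an empty list means it is safe to move on to the next host.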

As you can see, it is much easier and quicker to expand the vSphere cluster before you add the new nodes to the Nutanix cluster.
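To make the difference concrete, here is a small sketch that writes out both procedures as step lists for a given set of new nodes. It only builds text; nothing is executed against vCenter or Nutanix, and the function names and host names are made up for illustration.

```python
def vsphere_first_plan(new_nodes):
    """vSphere cluster first: every step is done for all new nodes at once."""
    nodes = ", ".join(new_nodes)
    return [
        f"Deploy Nutanix Operating System and vSphere on: {nodes}",
        f"Stop CVM on: {nodes}",
        f"Enter maintenance mode on: {nodes}",
        f"Add to vSphere cluster: {nodes}",
        f"Start CVM on: {nodes}",
        f"Wait for CVM to boot up on: {nodes}",
        "Expand Nutanix cluster",
    ]


def nutanix_first_plan(new_nodes):
    """Nutanix cluster first: the whole sequence is repeated node by node."""
    steps = []
    for node in new_nodes:
        steps += [
            f"Stop CVM on {node}",
            f"Enter maintenance mode on {node}",
            f"Add {node} to vSphere cluster",
            f"Start CVM on {node}",
            f"Wait for CVM on {node} to boot up",
            f"Run cluster status and confirm all services on {node} are UP",
        ]
    return steps


# Hypothetical host names.
nodes = ["esx-new-1", "esx-new-2", "esx-new-3"]
print(len(vsphere_first_plan(nodes)), "steps when vSphere is expanded first")
print(len(nutanix_first_plan(nodes)), "steps when Nutanix is expanded first")
```

The first plan stays the same length no matter how many nodes you add, while the second grows with every node because each one has to be handled on its own.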